A glimpse of the M2DC cloud computing appliance

Nov. 13, 2018, 8:06 a.m.

Authors: Ariel Oleksiak, Michal Kierzynka (PSNC), Dejan Štepec (XLAB)

Cloud computing applications are used in all sectors of industry, and their advantages affect our daily lives. A large share of IT workloads has moved to private and public clouds, and that share is still growing. As a consequence, clouds account for a significant part of the total energy used by IT.

In recent years a number of technology stacks have gained popularity, with OpenStack and container technologies being an important part of them. Any advance in the software and hardware solutions supporting these technologies has a great impact on efficiency, performance and reliability at a global scale.

Poznan Supercomputing and Networking Center (PSNC) is one of Poland's national computing centres. In addition to HPC infrastructure, it provides a variety of services, including services for commercial customers. These include cloud instances running on OpenStack as well as advanced services for PSNC customers and partners in areas such as education, visualisation and data analytics. These services are increasingly based on container technologies. For this reason, efficient and cost-effective server solutions supporting these software stacks are of great value to PSNC.

M2DC provides significant benefits through efficient microservers, easy and cost-effective hardware upgrades, and intelligent energy management techniques integrated with the OpenStack middleware. In particular, M2DC offers a competitive advantage in performance, scalability, efficiency and cost savings when providing Platform as a Service cloud solutions.

The Cloud Appliance is based on the M2DC microserver platform, which is optimised to deliver high efficiency and low Total Cost of Ownership (TCO) for Platform-as-a-Service applications. Through the microserver approach, a preinstalled software stack and integration with Intelligent Power Management, the M2DC Cloud Appliance can deliver as much as 30% savings in TCO and up to 40% gains in efficiency.

This turnkey appliance includes a preinstalled software stack so that users can concentrate on their domain problem instead of on DevOps. To that end, it makes deploying new applications easy: new Docker images are added to the same platform. The appliance integrates a scalable PaaS environment with advanced processing on heterogeneous nodes, and it has been tested with machine learning tools running on GPUs. We highlight its flexibility and scalability, which are based on: (i) the multiple configurations of microservers and accelerators; (ii) the new chassis that can be added in a scale-out fashion; and (iii) the architecture of the Cloud Appliance itself. Moreover, it includes the latest hardware, densely packed and optimised for web workloads and for additional workloads such as deep learning.

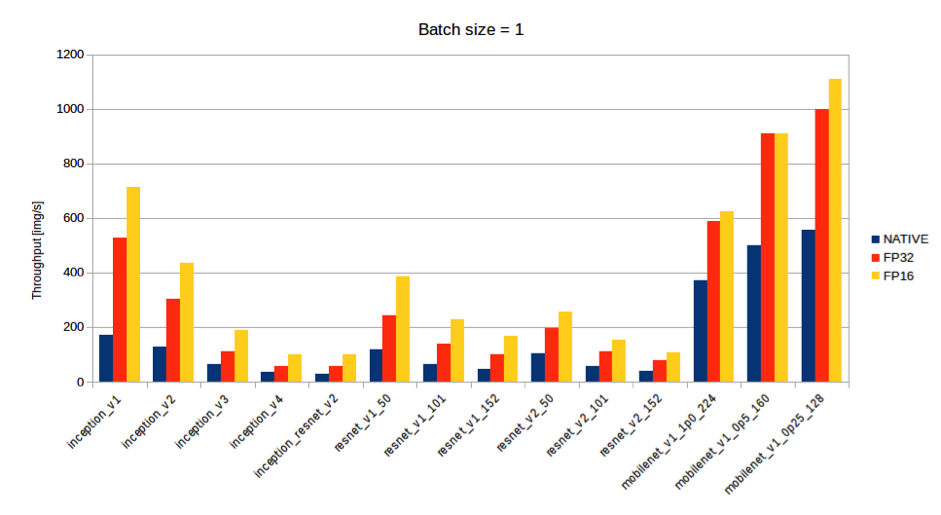

Deep learning workloads were thoroughly tested and optimised for the available hardware. We used NVIDIA's latest SDKs, such as TensorRT, for DL inference optimisation, and tested several well-known classification architectures. Some of the gains are visible in the chart below, which shows the improvements achieved with the NVIDIA TensorRT SDK, especially when using reduced precision, which is supported in hardware by the latest GPUs and used in our Cloud Appliance.

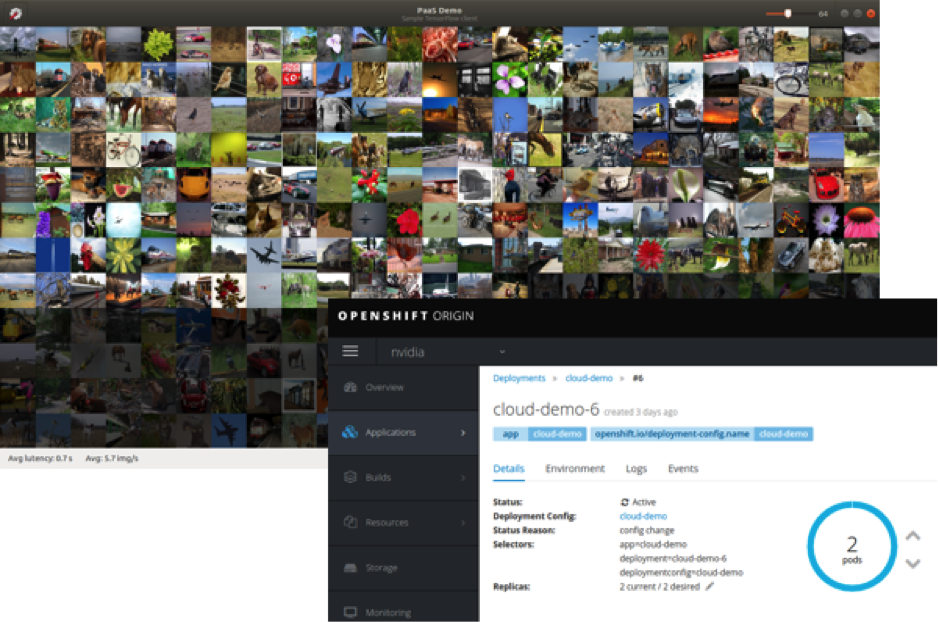
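
To give a feel for what reduced precision means in the inference results above, here is a minimal sketch in plain Python. It does not use TensorRT or a GPU. It only rounds a few hypothetical weight values (made up for illustration, not taken from any M2DC model) to IEEE 754 half precision and compares storage size and rounding error against single precision.

```python
import struct

def to_fp16(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def to_fp32(x: float) -> float:
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]

# Hypothetical weights, chosen only for illustration.
weights = [0.1, -0.3333, 1.2345, 0.001]

# Storage needed for the same values in FP32 and in FP16.
fp32_bytes = len(struct.pack(f"<{len(weights)}f", *weights))
fp16_bytes = len(struct.pack(f"<{len(weights)}e", *weights))

# Largest difference introduced by going from FP32 down to FP16.
max_err = max(abs(to_fp16(w) - to_fp32(w)) for w in weights)

print(f"FP32: {fp32_bytes} bytes, FP16: {fp16_bytes} bytes")
print(f"largest FP16 rounding error: {max_err:.6f}")
```

Halving the size of every value halves the memory traffic, and GPUs with hardware support for half precision can also process such values at a higher rate. The cost is a small rounding error per value, which is why accuracy is normally re-checked after switching precision.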

The M2DC team was once again present at the 2018 edition of the International Conference for High Performance Computing, Networking, Storage and Analysis (known as Supercomputing), this time with a live demonstration of the Cloud Appliance running a distributed deep learning application. The team brought the latest versions of the small and big M2DC chassis, system efficiency enhancements and appliances to the event in order to demo and discuss them in further detail. The following image shows the demonstration of the distributed deep learning application running on the Cloud Appliance.

Further steps in the development of the appliance include the integration of ARM CPUs and the final validation of the whole software and hardware stack through extensive tests and benchmarking. The final step will be to add automated configuration and tuning tools, together with documentation, to enable easy integration into existing infrastructure.

Currently, the Cloud Appliance has been thoroughly tested with deep learning inference services, which are a perfect fit for the Platform as a Service model. One of our future goals is to enable the Appliance to also perform distributed training, that is, training a deep neural network on multiple nodes. This can be achieved with additional plugins and is expected to provide support for software tools and frameworks such as Horovod, TensorFlow, MXNet, PyTorch and Chainer.
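
The idea behind data-parallel distributed training can be sketched in a few lines of plain Python. This is a toy simulation under stated assumptions, not the Appliance's implementation and not the API of Horovod or any other framework. The "nodes" are just list entries, the model is a single weight `w` fitted to the hypothetical relation y = 3x, and `allreduce_mean` is a stand-in for the collective operation that real frameworks run over the network.

```python
def gradient(w, shard):
    """Gradient of the mean squared error of y = w * x on one data shard."""
    return sum(2 * (w * x - y) * x for x, y in shard) / len(shard)

def allreduce_mean(values):
    """Stand-in for an allreduce: every worker ends up with the mean."""
    return sum(values) / len(values)

def train(shards, lr=0.05, steps=50):
    """Data-parallel SGD: each worker holds one shard and a copy of w."""
    w = 0.0
    for _ in range(steps):
        # Each worker computes a gradient on its own shard only.
        local_grads = [gradient(w, shard) for shard in shards]
        # Gradients are averaged, and every copy of w gets the same update.
        w -= lr * allreduce_mean(local_grads)
    return w

# Hypothetical data following y = 3x, split across two simulated nodes.
data = [(x, 3.0 * x) for x in (1.0, 2.0, 3.0, 4.0)]
shards = [data[:2], data[2:]]

w = train(shards)
print(f"learned weight: {w:.4f}")
```

Because the shards have equal size, the averaged gradient equals the gradient over the full data set, so the result matches single-node training while each node only touches its own part of the data.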